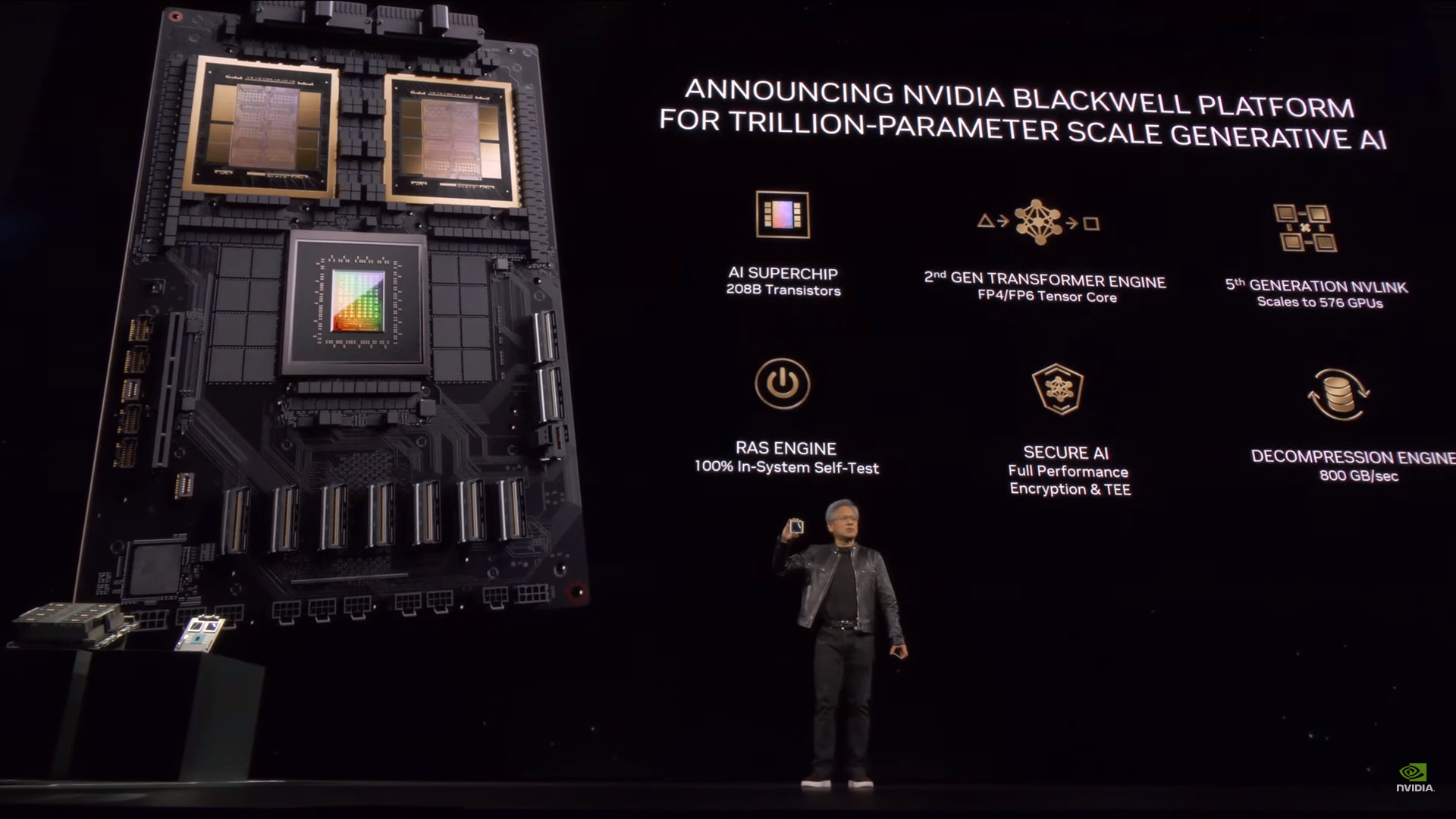

- Describing Blackwell as a groundbreaking AI super chip, Ian Buck, Nvidia’s Vice President of High-Performance Computing and Hyperscale, heralds its arrival as a game-changer in the world of technology. Paying homage to the pioneering mathematician David Harold Blackwell, the namesake behind the GPU architecture, Nvidia honors his legacy as the first Black inductee into the prestigious U.S. National Academy of Sciences.

- Blackwell represents a significant advancement,” remarks Buck. He added to his remark, that this new architecture introduces units capable of conducting matrix math operations with floating-point numbers as narrow as 4 bits. Moreover, it empowers the GPU to make nuanced decisions regarding the deployment of these units, allowing for their utilization across segments of each neural network layer, as opposed to the rigid allocation observed in Hopper. “Achieving such fine-grained granularity is nothing short of miraculous.”

During Nvidia’s highly anticipated developer conference, GTC 2024, the curtains were drawn back to unveil their latest technological marvel: the B200 GPU. This powerhouse is not just an upgrade; it’s a quantum leap in performance and efficiency. With the capability to deliver four times the training performance and a staggering 30 times the inference performance compared to its predecessor, the Hopper H100 GPU, the B200 is set to redefine the boundaries of computational prowess.

Nvidia’s groundbreaking H100 AI chip propelled the company into the realm of multitrillion-dollar valuation, positioning it potentially above tech giants like Alphabet and Amazon. Competitors have been in hot pursuit, striving to close the gap. However, Nvidia’s lead may be on the brink of extension with the introduction of the new Blackwell B200 GPU and GB200 “superchip.”

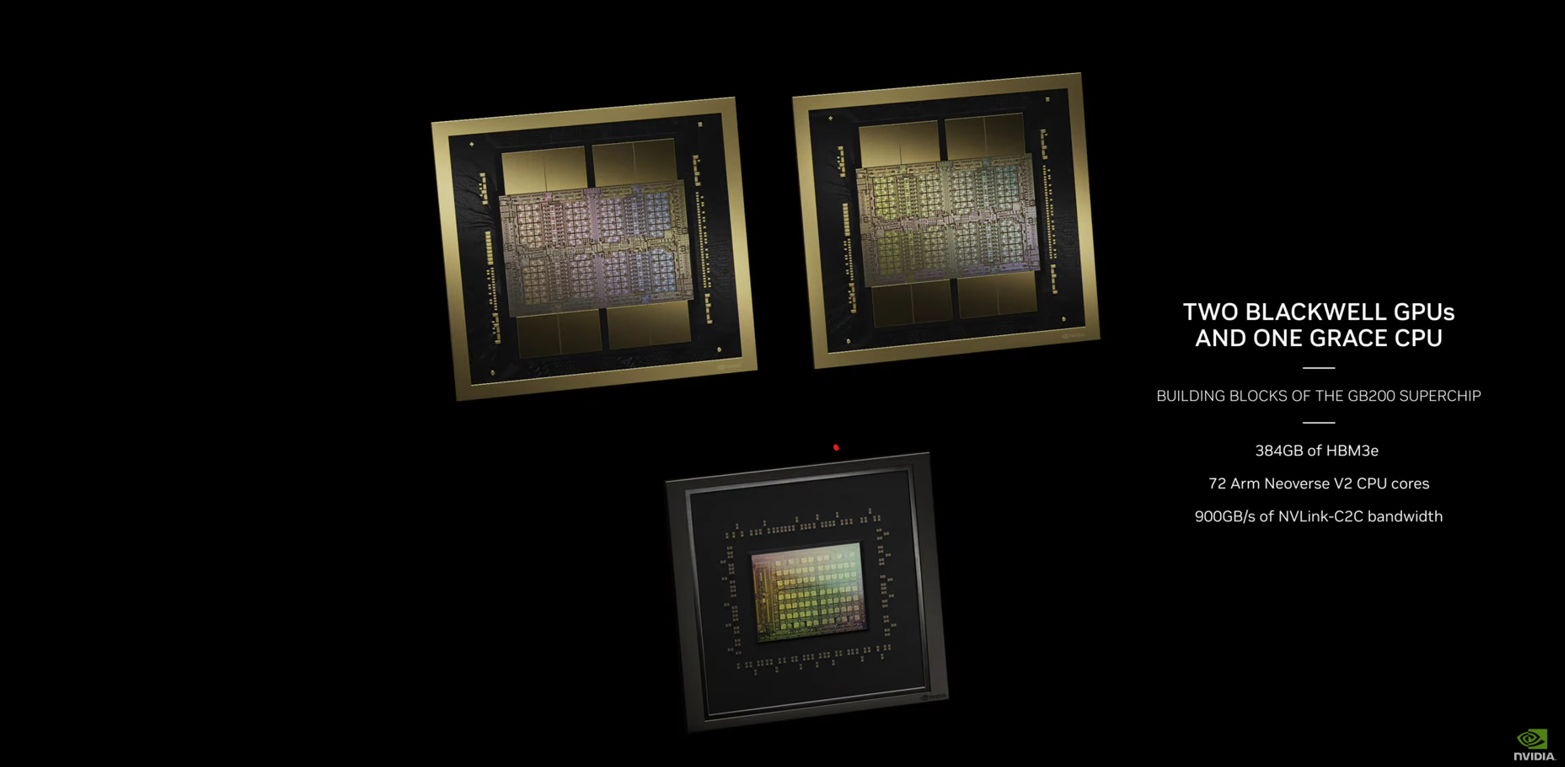

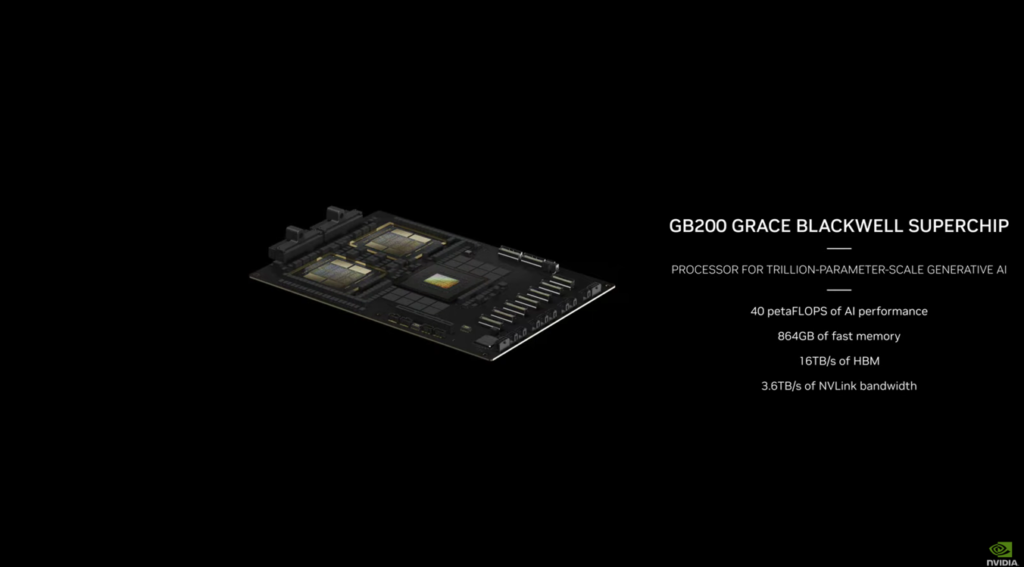

According to Nvidia, the B200 GPU boasts an impressive 20 petaflops of FP4 horsepower, powered by its staggering 208 billion transistors. Additionally, Nvidia asserts that the GB200, a fusion of two B200 GPUs and a single Grace CPU, delivers a monumental 30-fold performance boost for LLM inference workloads, while potentially achieving significantly enhanced efficiency. Nvidia claims this advancement can slash costs and energy consumption by up to 25 times compared to the H100. However, there remains a question mark surrounding the actual cost, as Nvidia’s CEO has hinted at a price range of $30,000 to $40,000 per GPU.

But that’s not all—Nvidia’s innovation doesn’t stop there. With up to 25 times better energy efficiency, the B200 is not just about raw power; it’s about sustainability and eco-friendliness in computing.

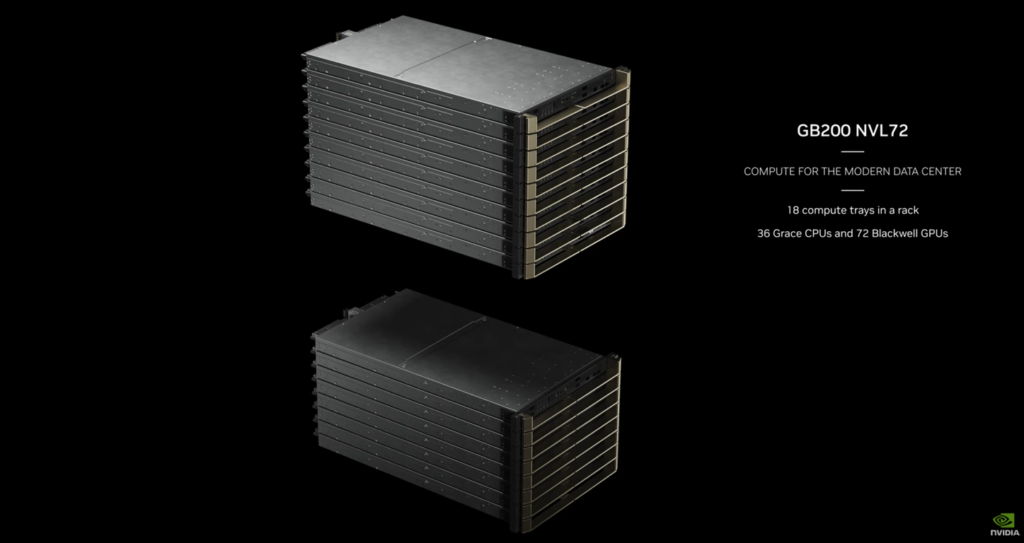

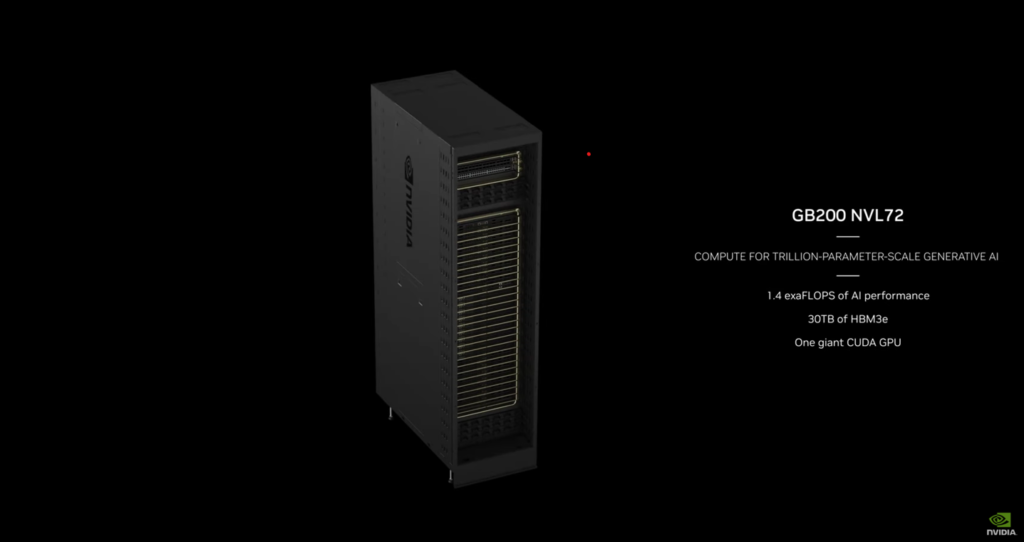

Built on the groundbreaking Blackwell architecture, this GPU is not just a standalone entity—it’s a catalyst for a new generation of computing. Paired with Nvidia’s Grace CPUs, it forms the backbone of the next evolution in computing—the DGX SuperPOD. This formidable combination is capable of handling up to a mind-boggling 11.5 billion billion floating-point operations (exaflops) of AI computing.

B200: More than a chip, it’s an innovation symphony shaping our tech-driven future

The B200 introduces a new frontier in precision with its low-precision number format, pushing the boundaries of what’s possible in AI computing. With the B200 GPU leading the charge, Nvidia is ushering in a new era of computing—one where performance, efficiency, and innovation converge to shape the future of technology.

The B200 is more than just a chip; it’s a symphony of engineering brilliance. Imagine a canvas of approximately 1600 square millimeters, meticulously etched with intricate patterns of silicon. But here’s the magic: this canvas isn’t a solitary masterpiece. It’s a dynamic duo—a tango of two silicon dies, seamlessly linked within the same package by a 10 terabytes per second connection. These slices of silicon perform in perfect harmony, dancing as if they were a single 208-billion-transistor chip.

Now, let’s peek behind the curtain. The B200 owes its prowess to TSMC’s cutting-edge N4P chip technology. This isn’t your run-of-the-mill silicon; it’s the stuff of digital dreams. The B200 flaunts a 6 percent performance boost over its predecessor, the Hopper architecture GPUs (like the H100). It’s like upgrading from a trusty bicycle to a supersonic jet.

But wait, there’s more! Wrapped around the B200 like a protective cloak is high-bandwidth memory (HBM3e, to be precise). Think of it as the secret sauce that reduces latency and sips energy like a well-behaved AI. This memory buffet boasts a generous 192 GB—a leap from the H200’s 141 GB. And the memory bandwidth? Brace yourself: it’s 8 terabytes per second (up from the H200’s 4.8 TB/s). That’s data whizzing through at warp speed, making sure your AI models never miss a beat.

So, next time you encounter the B200, tip your hat to this silicon maestro. It’s not just a chip; it’s a symphony of innovation, playing the soundtrack of our tech-driven future.

While chipmaking technology played a crucial role in bringing Blackwell to fruition, it’s the transformative capabilities of the GPU itself that truly set it apart. In a compelling address to computer scientists at last year’s IEEE Hot Chips conference, Nvidia’s Chief Scientist, Bill Dally, underscored that the key to Nvidia’s AI success lies in the strategic reduction of numerical precision in AI calculations—a trend that Blackwell seamlessly continues.

The predecessor architecture, Hopper, introduced Nvidia’s innovative transformer engine—a groundbreaking system designed to scrutinize each layer of a neural network and determine the feasibility of computing with lower-precision numbers. Hopper’s pioneering approach allowed for the use of floating-point number formats as compact as 8 bits. Utilizing smaller numbers not only accelerates computation and enhances energy efficiency but also demands less memory and memory bandwidth, while simultaneously reducing silicon usage.

Among the myriad architectural revelations about Blackwell, Nvidia unveiled a dedicated “engine” specifically designed to enhance the GPU’s reliability, availability, and serviceability. Leveraging AI-based diagnostics, this innovative system aims to preemptively identify and forecast potential reliability issues, thus maximizing uptime and ensuring seamless operation of massive AI systems for extended durations—crucial for the uninterrupted training of large language models over weeks at a time.

Furthermore, Nvidia has integrated systems within Blackwell to bolster the security of AI models and expedite data processing. These additions serve to fortify the integrity of AI endeavors and accelerate database queries and data analytics, enhancing overall efficiency and performance.

Notably, Blackwell also incorporates Nvidia’s fifth-generation computer interconnect technology, NVLink, which boasts an impressive bidirectional throughput of 1.8 terabytes per second between GPUs. This facilitates lightning-fast communication among up to 576 GPUs, setting a new standard for high-speed data exchange. In comparison, Hopper’s iteration of NVLink operated at half that bandwidth, highlighting the significant leap forward in interconnect capabilities ushered in by Blackwell.

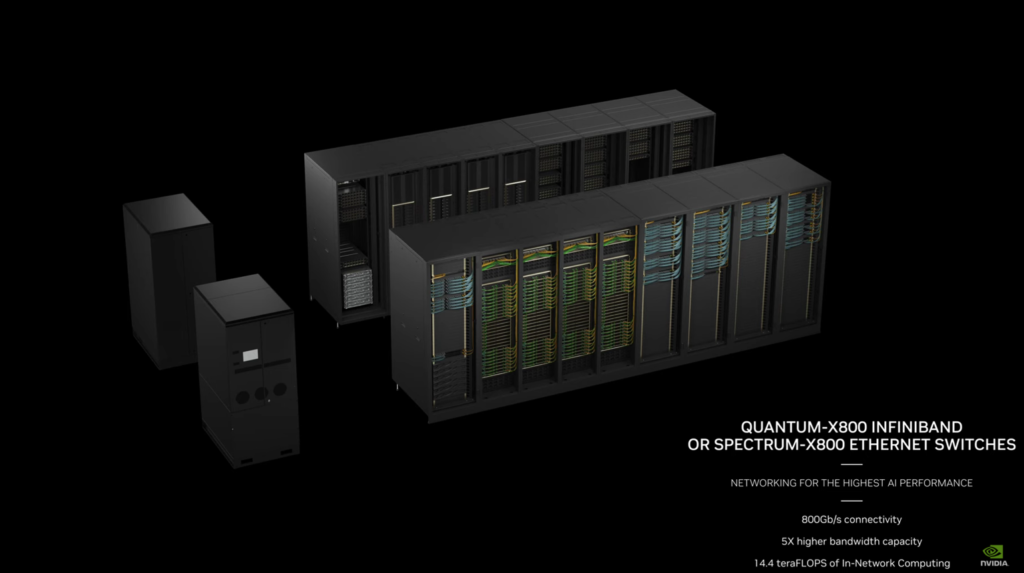

Expanding the capabilities even further, eight DGX GB200s can be interconnected via NVLink to create a formidable 576-GPU supercomputer known as a DGX SuperPOD. Nvidia projects that such a computing behemoth is capable of unleashing a staggering 11.5 exaflops of processing power, leveraging 4-bit precision calculations. With Nvidia’s Quantum Infiniband networking technology, the potential extends to systems encompassing tens of thousands of GPUs, promising unprecedented computational might and scalability.

Anticipate the arrival of SuperPODs and other Nvidia computing solutions later this year, ushering in a new era of high-performance computing. Concurrently, chip foundry TSMC and electronic design automation company Synopsys have announced plans to transition Nvidia’s cutting-edge inverse lithography tool, cuLitho, into full-scale production—a testament to the ongoing advancements and collaborations driving innovation in the realm of semiconductor technology.

Blackwell products: Set for broad adoption and integration across industries

Leading the charge are cloud service giants AWS, Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure, who will be among the inaugural providers offering Blackwell-powered instances. Joining them are esteemed members of the NVIDIA Cloud Partner program, including Applied Digital, CoreWeave, Crusoe, IBM Cloud, and Lambda, all poised to integrate Blackwell into their offerings. Sovereign AI clouds will also play a pivotal role in delivering Blackwell-based cloud services and infrastructure, with notable participants such as Indosat Ooredoo Hutchinson, Nebius, Nexgen Cloud, Oracle EU Sovereign Cloud, and Scaleway, to name a few.

For those seeking access to Blackwell-powered solutions through dedicated platforms, NVIDIA DGX™ Cloud emerges as a beacon, offering enterprise developers exclusive access to infrastructure and software tailored for building and deploying advanced generative AI models. Collaborations with AWS, Google Cloud, and Oracle Cloud Infrastructure are set to bring Blackwell instances to these platforms in the near future.

In the realm of hardware, industry titans Cisco, Dell, Hewlett Packard Enterprise, Lenovo, and Supermicro are gearing up to deliver a diverse array of servers built on Blackwell products. Complementing this lineup are an impressive roster of server manufacturers including Aivres, ASRock Rack, ASUS, Eviden, Foxconn, GIGABYTE, Inventec, Pegatron, QCT, Wistron, Wiwynn, and ZT Systems, all committed to ushering in the era of Blackwell computing.

But the impact doesn’t end there. A burgeoning network of software innovators, including Ansys, Cadence, and Synopsys—renowned leaders in engineering simulation—are harnessing the power of Blackwell-based processors to accelerate the design and simulation of electrical, mechanical, and manufacturing systems and components. This collaboration promises to empower customers with generative AI and accelerated computing, enabling faster time-to-market, reduced costs, and enhanced energy efficiency for their products.